![[Put logo here]](media/logo.jpg)

Modelling meaning: a short history of text markup

Lou Burnard

A naive realist's manifesto

Modelling matters

A very long time ago

Let's start in the unfamiliar world of the mid-1980s...

- the world wide web did not exist

- the tunnel beneath the English Channel was still being built

- a state called the Soviet Union had just launched a space station called Mir

- serious computing was done on mainframes

- the world was managing nicely without the DVD, the mobile phone, cable tv, or Microsoft Word

...but also a familiar one

- corpus linguistics and ‘artificial intelligence’ had created a demand for large scale textual resources in academia and beyond

- advances in text processing were beginning to affect lexicography and document management systems (e.g. TeX, Scribe, (S)GML ...)

- the Internet existed for academics and for the military; theories about how to use it ‘hypertextually’ abounded

- books, articles, and even courses in something called "Computing in the Humanities" were beginning to appear

Modelling the data vs modelling the text

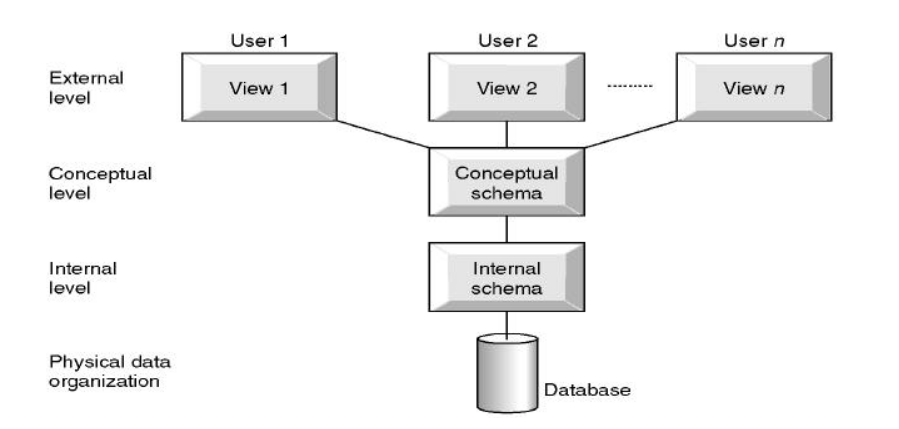

By the end of the 1970s, methods variously called ‘data modelling’, ‘conceptual analysis’, ‘database design’ vel sim. had become common practice.

- remember: a centralised mainframe world dominated by IBM

- spread of office automation and consequent data integration

- ANSI SPARC three level model

An inherently reductive process

how applicable are such methods to the complexity of historical data sources?

The 1980s were a period of technological enthusiasm

- Digital methods and digital resources, despite their perceived strangeness were increasingly evident in the Humanities

- There was some public funding of infrastructural activities, both at national and European levels: in the UK, for example, the Computers in Teaching Initiative and the Arts and Humanities Data Service

- Something radically new, or just an update ?

- Humanities Computing (aka Digital Humanities) gets a foothold, by establishing courses

Re-invention of quellenkritik

‘History that is not quantifiable cannot claim to be scientific’ (Le Roy Ladurie 1972)

- In the UK, a series of History and Computing (1986-1990) conferences showed historians already using commercial DBMS, data analysis tools developed for survey analysis, "personal database systems" ...

- In France, J-P Genet and others influenced by the Annales school proposed a programme of digitization of historical sources records

- Further pursued by Manfred Thaller with the program kleio (1982) -- a tool for transcribing and analysing (extracts from) historical sources, which included annotation of their content/significance

- Thaller also (in 1989) challenged advocates of Humanities Computing to define its underlying theory

Theorizing Humanities Computing

- What are the underlying principles of the tools used in Humanities Computing (then) or Digital Humanities (now)?

- Unsworth and others eventually (by 2002) start using the phrase ”scholarly primitives” to characterise a core set of procedures e.g.

- searching on the basis of externally-defined features

- analysis in terms of internally-defined features

- association according to shared readings

Isn't the modelling of textual data at the heart of all these?

Serious computing meets text

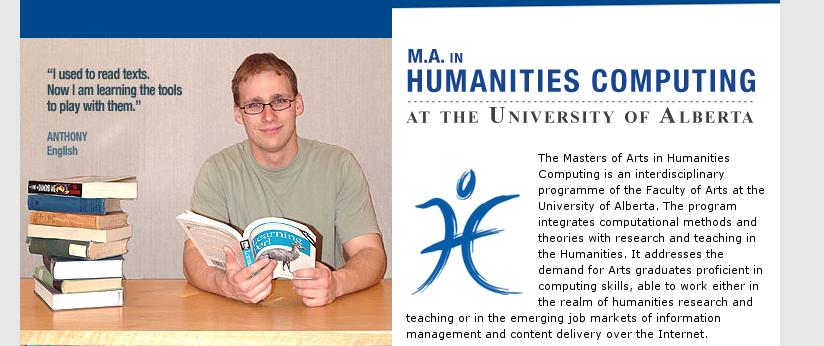

In interpreting text, the trained human brain operates quite successfully on three distinct levels; not surprisingly, three distinct types of computer software have evolved to mimic these capabilities.

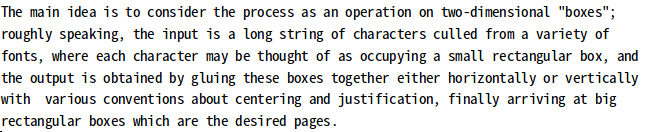

Text is little boxes

- TeX was developed by Donald Knuth, a Stanford mathematician, to produce high quality typeset output from annotated text

- Knuth also developed the associated idea of literate programming: that software and its documentation should be written and maintained as an integrated whole

- TeX is still widely used, particularly in the academic community: it is open source and there are several implementations

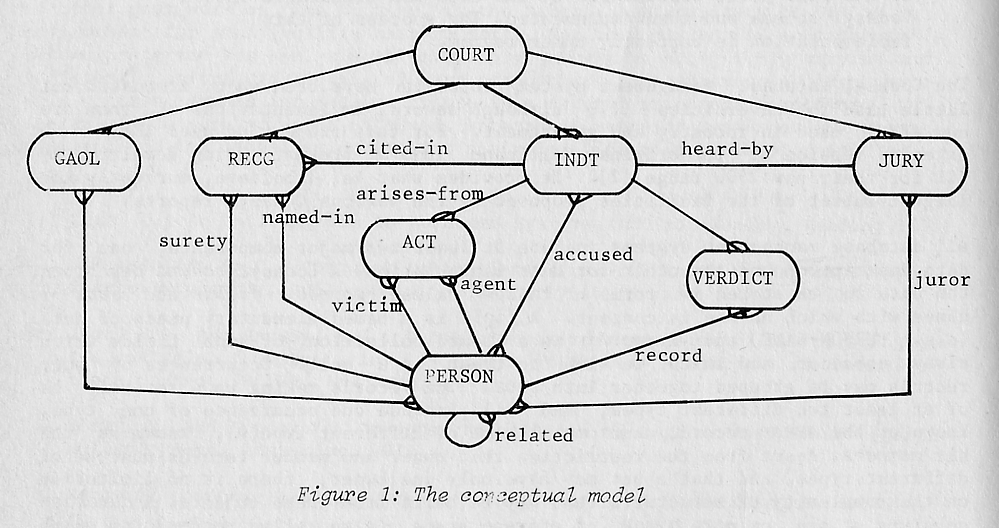

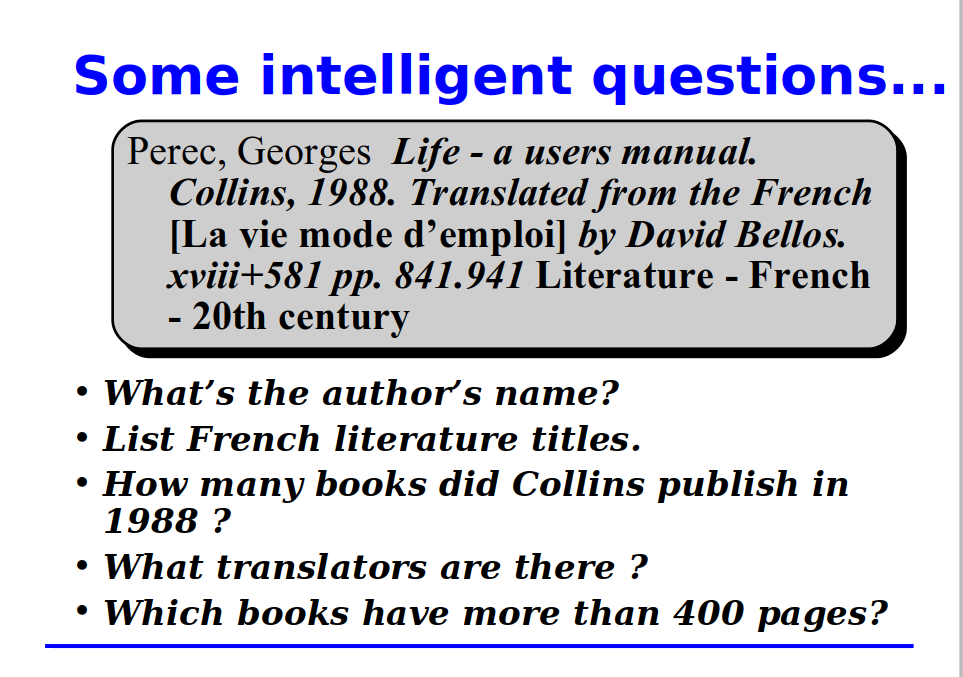

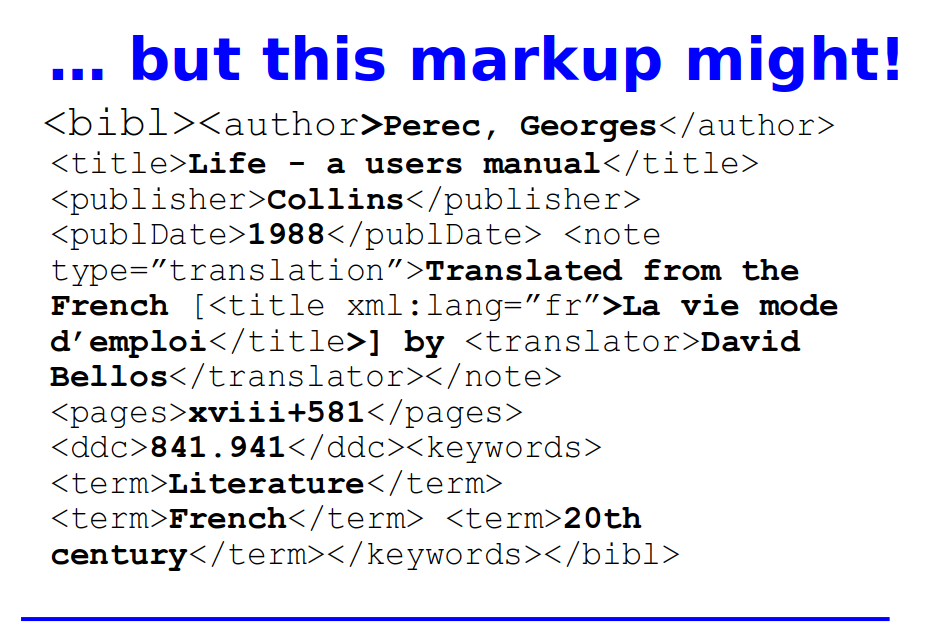

Database orthodoxy

- identify important entities which exist in the real world and the relationships amongst them

- formally define a conceptual model of that universe of discourse

- map the conceptual model to a storage model (network, relational, whatever...)

But what are the "important entities" we might wish to identify in a textual resource?

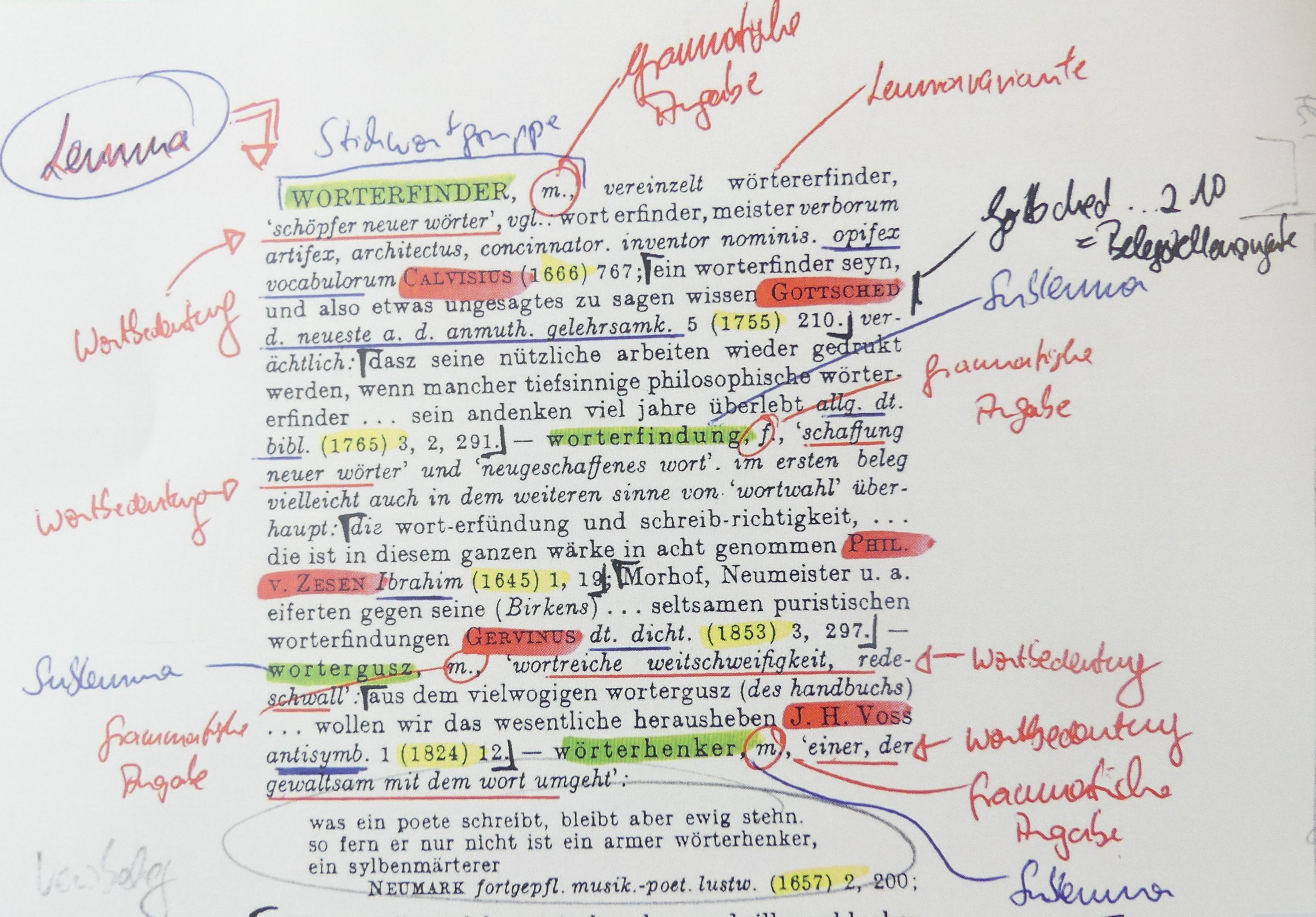

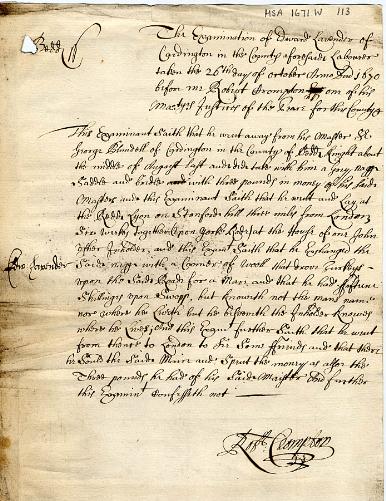

Assize court records, for example

Scribe

Scribe developed by Brian K Reid at Carnegie Mellon in the 1980s, was one of the earliest successful document production systems to separate content and format, and to use a formal document specification language. Its commercial exploitation was short-lived, but its ideas were very influential.

(S)GML

Charles Goldfarb and others developed a "Generalized Markup Language" for IBM, which subsequently became an ISO standard (ISO 8879: 1986)

SGML was designed to enable the sharing and long term preservation of machine-readable documents for use in large scale projects in government, the law, and industry. the military, and other industrial-scale publishing industries.

SGML is the ancestor of HTML and of XML ... it defined for a whole generation a new way of thinking about what text really is

Motivations

- an enormous increase in the quantity of technical documentation : the aircraft carrier story

- an enormous increase in its complexity : the Gare de Lyon story

- a proliferation of mutually incompatible document formats

- an almost evangelical desire for centrally-defined standards

- a mainframe-based, not yet distributed, world in transition

What is a text?

- content: the components (words, images etc). which make up a document

- structure: the organization and inter-relationship of those components

- presentation: how a document looks and what processes are applied to it

- .. and possibly many readings

Separating content, structure, and presentation means :

- the content can be re-used

- the structure can be formally validated

- the presentation can be customized for

- different media

- different audiences

- in short, the information can be uncoupled from its processing

This is not a new idea! But it's a good one...

Some ambitious claims ensued

A fuller example...

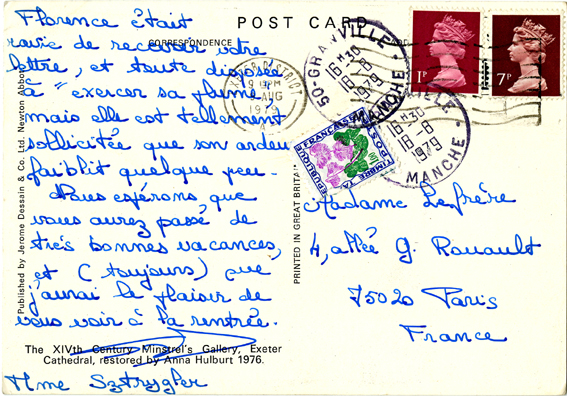

<carte n="0010">

<recto url="19800726_001r.jpg">

<desc>Vue d'un cours d'eau avec un pont en pierre et des

petites maisons de style mexicain. Un homme et une femme

navigue un pédalo en premier plan a gauche.</desc>

<head>San Antonio River</head>

</recto>

<verso url="19800726_001v.jpg">

<obliteration>

<lieu>El Paso TX 799</lieu>

<date>18-08-1980</date>

</obliteration>

<message>

<p>26 juill 80</p>

<p>Chère Madame , après New-York et Washington dont le

gigantisme m'a beaucoup séduite, nous avons commencé

notre conquête de l'Ouest par New Orleans, ville folle

en fête perpétuelle. Il fait une chaleur torride au

Texas mais le coca-cola permet de résister –

l'Amérique m'enchante ! Bientôt, le grand Canyon, le

Colorado et San Francisco... </p>

<p> En espérant que vous passez de bonnes vacances,

affectueusement. </p>

<signature> Sylvie </signature>

<signature>François </signature>

</message>

<destinataire>Madame Lefrère

4, allée George Rouault

75020 Paris

France

</destinataire>

</verso>

</carte>

A digital text may be ...

a ‘substitute’ (surrogate) simply representing the appearance of an existing document

... or it may be

a representation of its linguistic content and structure, with additional annotations about its meaning and context.

What does the markup do?

- It makes explicit to a processor how something should be processed.

- In the past, ‘markup’ was what told a typesetter how to deal with a manuscript

- Nowadays, it is what tells a computer program how to deal with a stream of textual data.

... and it also expresses the encoder's view of what matters in this document, thus determining how it can subsequently be analysed.

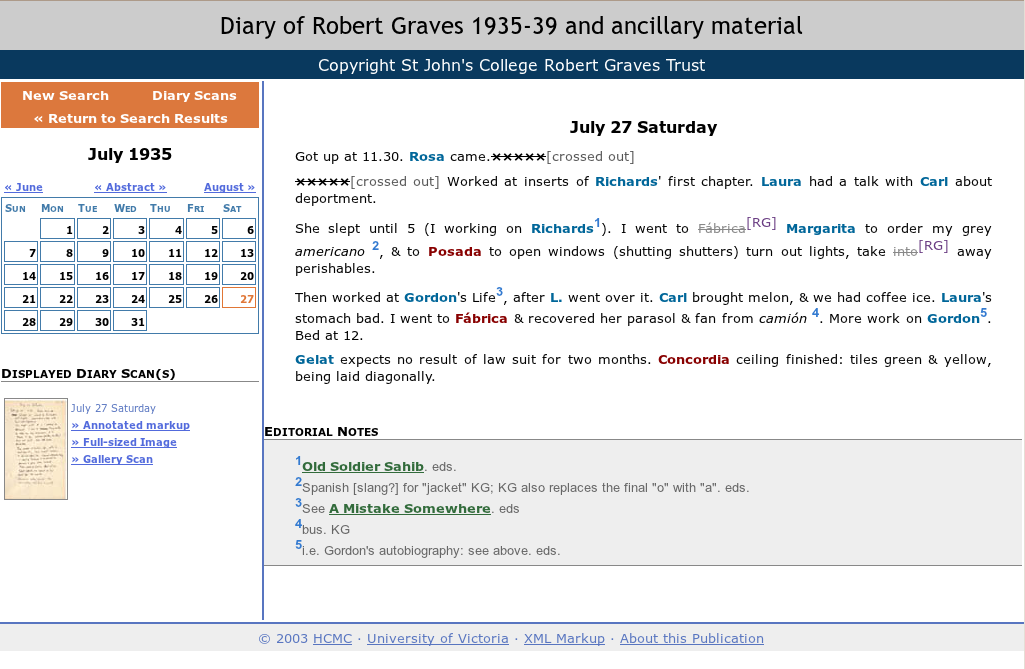

Where is the textual data and where is the markup?

Where is the textual data and where is the markup?

Which textual data matters ?

- the shape of the letters and their layout?

- the presumed creator of the writing?

- the (presumed) intentions of the creator?

- the stories we read into the writing?

A ‘document’ is something that exists in the world, which we can digitize.

A ‘text’ is an abstraction, created by or for a community of readers, which we can encode.

The document as ‘Text-Bearing Object’(TBO)

Materia appetit formam ut virum foemina

- Traditionally, we distinguish form and content

- In the same way, we might think of an inscription or a manuscript as the bearer or container or form instantiating an abstract notion -- a text

But don't forget... digital texts are also TBOs!

Markup is a scholarly activity

- The application of markup to a document is an intellectual activity

- Deciding exactly what markup to apply and why is much the same as editing a text

- Markup is rarely neutral, objective, or deterministic : interpretation is needed

- Because it obliges us to confront difficult ontological questions, markup can be considered a research activity in itself

- Good textual encoding is never as easy or quick as people would believe -- do things better, not necessarily quicker

- The markup scheme used for a project should result from a detailed analysis of the properties of the objects the project aims to use or create

Compare the markup

<hi rend="dropcap">H</hi>

<g ref="#wynn">W</g>ÆT WE GARDE <lb/>na in

gear-dagum þeod-cyninga <lb/>þrym gefrunon, hu ða æþelingas <lb/>ellen

fremedon. oft scyld scefing sceaþe

<add>na</add>

<lb/>þreatum, moneg<expan>um</expan> mægþum meodo-setl

<add>a</add>

<lb/>of<damage>

<desc>blot</desc>

</damage>teah ...

<lg>

<l>Hwæt! we Gar-dena in gear-dagum</l>

<l>þeod-cyninga þrym gefrunon,</l>

<l>hu ða æþelingas ellen fremedon,</l>

</lg>

<lg>

<l>Oft <persName>Scyld Scefing</persName>

sceaþena þreatum,</l>

<l>monegum mægþum meodo-setla ofteah;</l>

<l>egsode <orgName>Eorle</orgName>, syððan ærest wearþ</l>

<l>feasceaft funden...</l>

</lg>

... and

<s>

<w pos="interj" lemma="hwaet">Hwæt</w>

<w pos="pron" lemma="we">we</w>

<w pos="npl" lemma="gar-denum">Gar-dena</w>

<w pos="prep" lemma="in">in</w>

<w pos="npl" lemma="gear-dagum">gear-dagum</w> ...

</s>

or even

<w pos="npl" corresp="#w2">Gar-dena</w>

<w pos="prep" corresp="#w3">in</w>

<w pos="npl" corresp="#w4">gear-dagum</w>

<w xml:id="w2">armed danes</w>

<w xml:id="w3">in</w>

<w xml:id="w4">days of yore</w>

.. not to mention ...

<l>Oft <persName ref="https://en.wikipedia.org/wiki/Skj%C3%B6ldr">Scyld Scefing</persName>

sceaþena þreatum,</l>

or even

<l>Oft <persName ref="#skioldus">Scyld Scefing</persName>

sceaþena þreatum,</l>

<person xml:id="skioldus">

<persName source="#beowulf">Scyld Scefing</persName>

<persName xml:lang="lat">Skioldus</persName>

<persName xml:lang="non">Skjöld</persName>

<occupation>Legendary Norse King</occupation>

<ref target="https://en.wikipedia.org/wiki/Skj%C3%B6ldr">Wikipedia entry</ref>

</person>

Wait ...

- How many markup systems does the world need?

- One size fits all?

- Let a thousand flowers bloom?

- Roll your own!

- We've been here before...

- One construct and many views

- modularity and extensibility

... did someone mention the TEI ?

The Text Encoding Initiative

- Spring 1987: European workshops on standardisation of historical data (J.P. Genet, M. Thaller )

- Autumn 1987: In the US, the NEH funds an exploratory international workshop on the feasibility of defining "text encoding guidelines"

The obvious question

- So the TEI is very old!

- Not much in computing survives 5 years, never mind 20

- Why is it still here, and how has it survived?

- What relevance can it possibly have today?

- And with XML everyone can create their own markup system and still share data!

- And in the Semantic Web, XML systems will all understand each other's data!

- RDF can describe every kind of markup; SPARQL can search it!

Well .... maybe ....

Why the TEI?

The TEI provides

- a language-independent framework for defining markup languages

- a very simple consensus-based way of organizing and structuring textual (and other) resources...

- ... which can be enriched and personalized in highly idiosyncratic or specialised ways

- a very rich library of existing specialised components

- an integrated suite of standard stylesheets for delivering schemas and documentation in various languages and formats

- a large and active open source style user community

Relevance

Why would you want those things?

- because we need to interchange resources

- between people

- (increasingly) between machines

- because we need to integrate resources

- of different media types

- from different technical contexts

- because we need to preserve resources

- cryogenics is not the answer!

- we need to preserve metadata as well as data

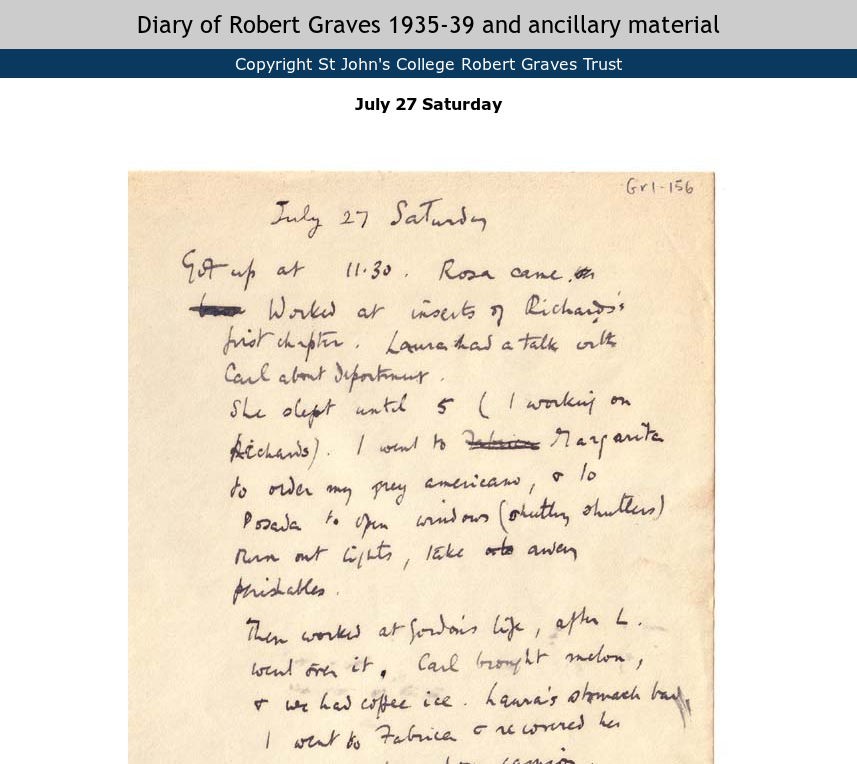

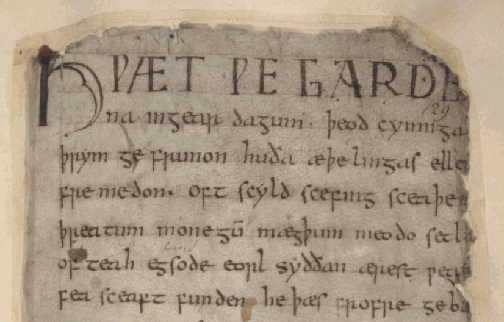

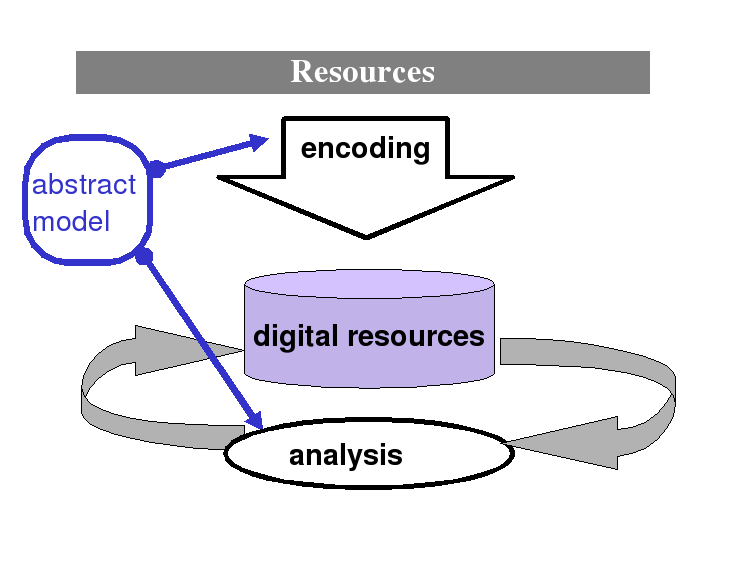

The virtuous circle of encoding

The scope of intelligent markup

The TEI provides -- amongst others -- recommended markup for

- basic structural and functional components of text

- diplomatic transcription, images, annotation

- links, correspondence, alignment

- data-like objects such as dates, times, places, persons, events (named entity recognition)

- meta-textual annotations (correction, deletion, etc)

- linguistic analysis at all levels

- contextual metadata of all kinds

- ... and so on and so on and so forth

Is it possible to delimit encyclopaedically all possible kinds of markup?

Why use a common framework ?

- re-usability and repurposing of resources

- modular software development

- lower training costs

- ‘frequently answered questions’ — common technical solutions for different application areas

The TEI was designed to support multiple views of the same resource

Conformance issues

A document is TEI Conformant if and only if it:

- is a well-formed XML document

- can be validated against a TEI Schema, that is, a schema derived from the TEI Guidelines

- conforms to the TEI Abstract Model

- uses the TEI Namespace (and other namespaces where relevant) correctly

- is documented by means of a TEI Conformant ODD file which refers to the TEI Guidelines

TEI conformance does not mean ‘Do what I do’, but rather ‘Explain what you do in terms I can understand’

Why is the TEI still here?

Because it is a model of textual data which is ...

- customisable,

- self-descriptive,

- and user-driven

![[Put logo here]](media/logo.jpg)

![[Put logo here]](media/logo.jpg)