![[Put logo here]](media/logo.png)

Modelling meaning: the role of data in the humanities

He who sees the Infinite in all things sees God. He who sees the Ratio only, sees himself only

Lou Burnard

The brief

- What is the status of data in the humanities and social science research of our time?

- How has data, its encoding and markup, changed the way discourse studies perceive their subject?

- Does data-driven discourse research change its role in society?

- How does data impact scientific understanding and what is its role in epistemological processes?

- What is the role of data in field work between experience, encounter, and interaction?

- What ethical implications arise in handling research data?

- In what direction will (and should) discourse research develop and what role will data play in it?

(Sorry, I won't answer all these questions)

The status of data in current SHS research

- Data is omnipresent, but not entirely omniscient

- Some disciplines are almost entirely data-dependent (e.g. corpus linguistics, stylometrics)

- In others (e.g. literary studies) data-dependence remains controversial

- The massive expansion of data availability has led to a recognition of the centrality of data-modelling

- ... but there are two kinds of modelling:

- in the traditional humanities, a model is a means of abstraction and a set of categories: a reductive process

- in the social sciences, a model is a tool for prediction and generalisation: an analytic process

(see e.g. Flanders and Jannidis 2019)

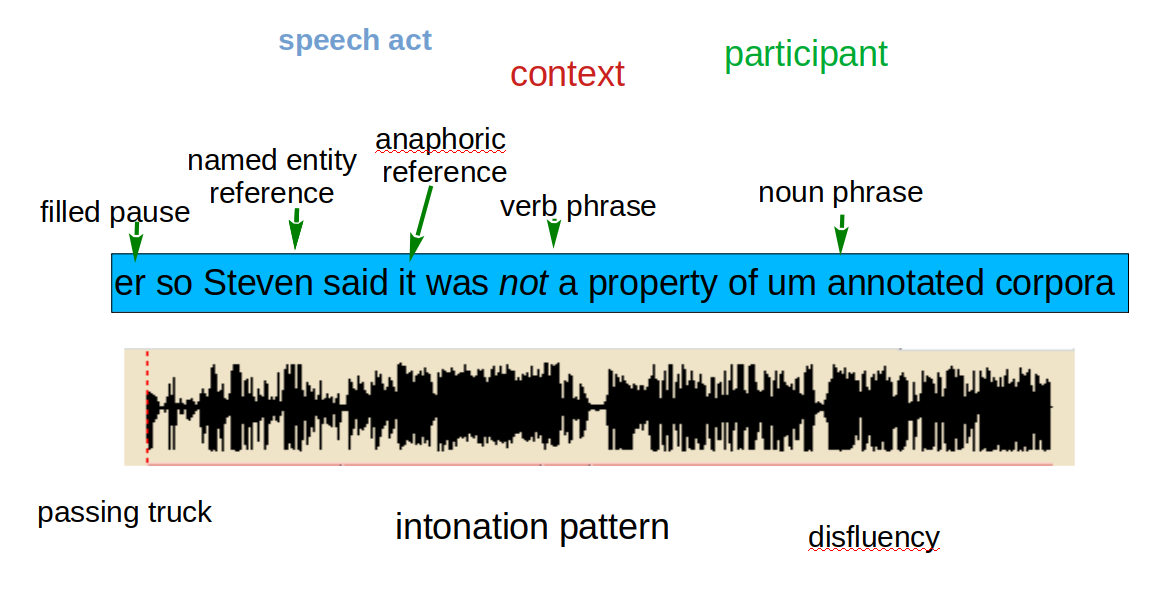

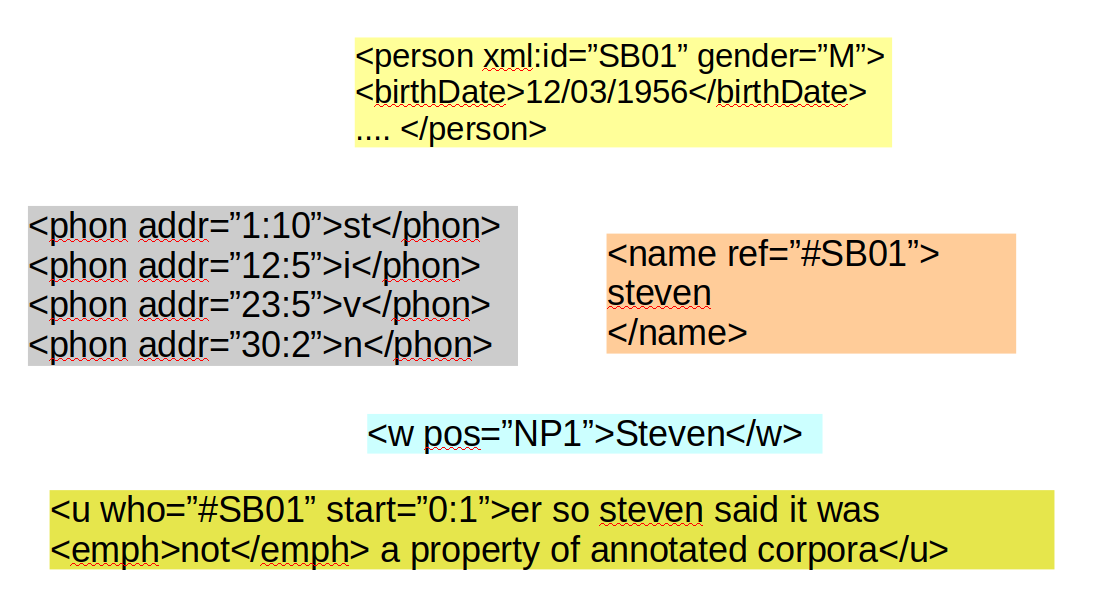

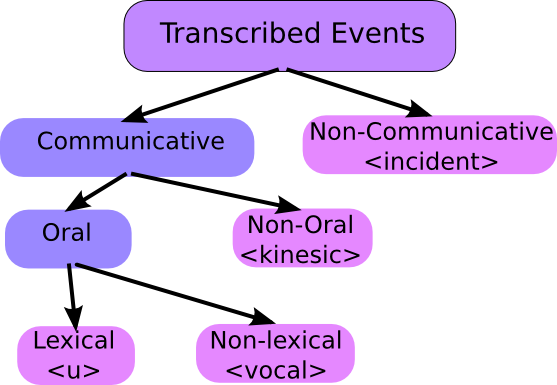

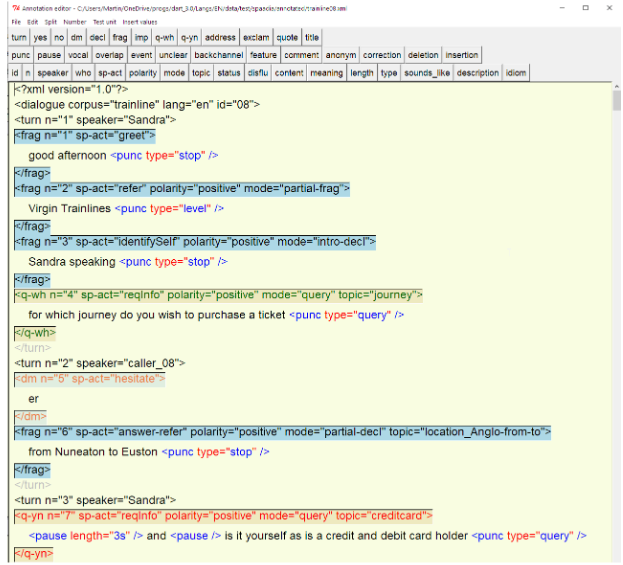

Encoding data for discourse studies

- (CAVEAT: I am not a discourse studies person)

- discourse components cross multiple levels

Which level/s of description do we favour?

Multiple levels can coexist in XML

But in practice, linguists seem to prefer fairly simple -- reductive -- data categorisations

A choice must be made...

Is there any such thing as a "pure" transcription?

A language corpus consists of samples of authentic language productions ...

- selected according to explicit principles, for specific goals

- represented in a digital form

- generally enriched with metadata and annotation beyond "pure" transcription

Can there be any re-presentation without interpretation?

Annotation: necessary evil or fundamental?

‘Annotation ... is anathema to corpus-driven linguists.’ (Aarts, 2002)

‘The interspersing of tags in a language text is a perilous activity, because the text thereby loses integrity.’ (Sinclair, 2004)

‘… the categories used to annotate a corpus are typically determined before any corpus analysis is carried out, which in turn tends to limit ... the kind of question that usually is asked.’ (Hunston, 2002)

- transcription represents the transcriber's understanding of the source

- encoding/annotation represents the annotator's intuitions about it, in a codified form

- how are these different?

‘... all encoding interprets, all encoding mediates. There is no 'pure' reading experience to sully. We don't carry messages, we reproduce them -- a very different kind of involvement. We are not neutral; by encoding a written text we become part of the communicative act it represents.’ (Caton 2000)

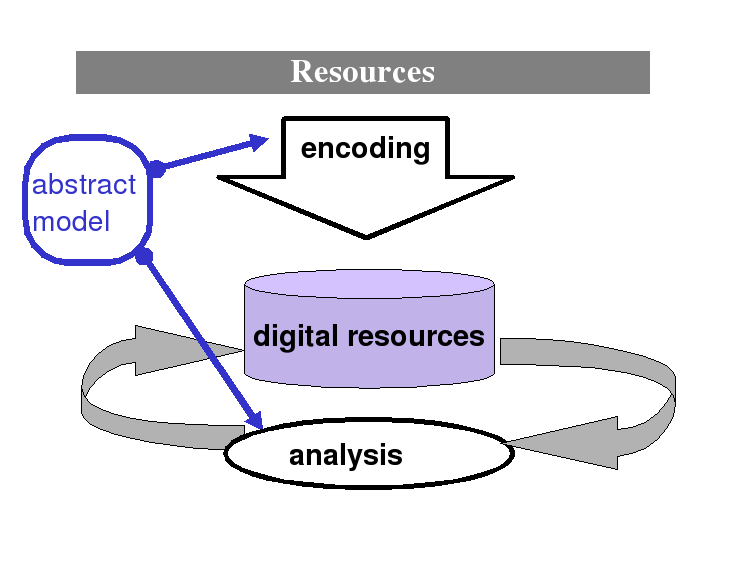

A naive realist's manifesto

How do we keep the virtuous hermeneutic circle turning?

Modelling matters

How did we get here from there?

Let's (briefly) go back to the unfamiliar world of the mid-1980s...

- the world wide web did not exist

- the tunnel beneath the English Channel was still being built

- a state called the Soviet Union had just launched a space station called Mir

- serious computing was done on mainframes

- the world was managing quite nicely without the DVD, the mobile phone, cable TV, or Microsoft Word

...but also a familiar one

- corpus linguistics and ‘artificial intelligence’ had created a demand for large scale textual resources in academia and beyond

- advances in text processing were beginning to affect lexicography and document management systems (e.g. TeX, Scribe, (S)GML ...)

- the Internet existed for academics and for the military; theories about how to use it ‘hypertextually’ abounded

- books, articles, and even courses in something called "Computing in the Humanities" were beginning to appear

Modelling the data vs modelling the text

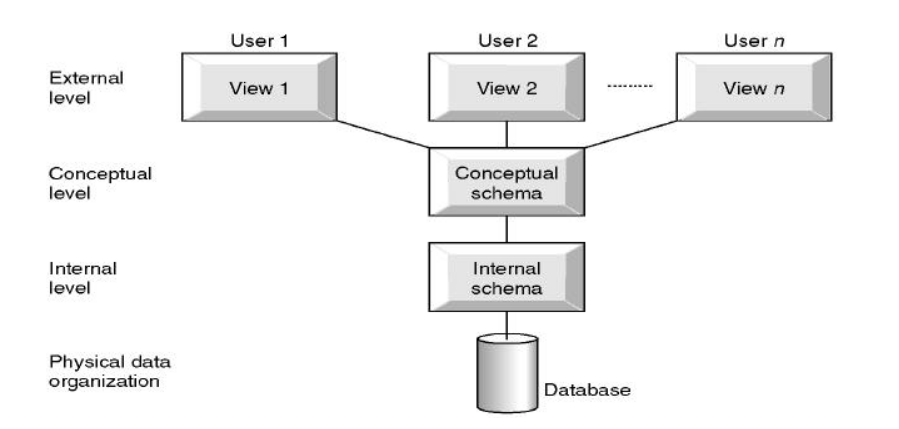

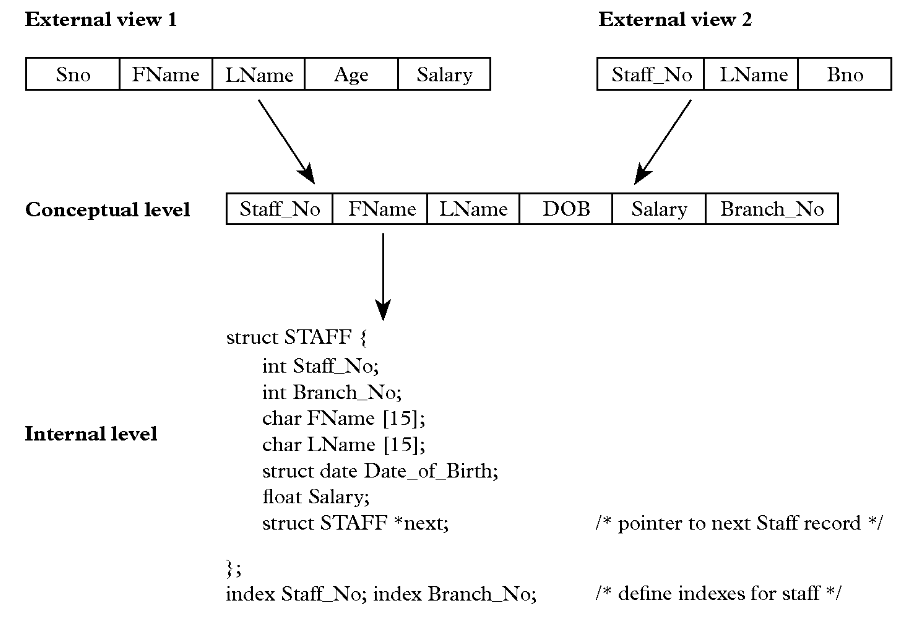

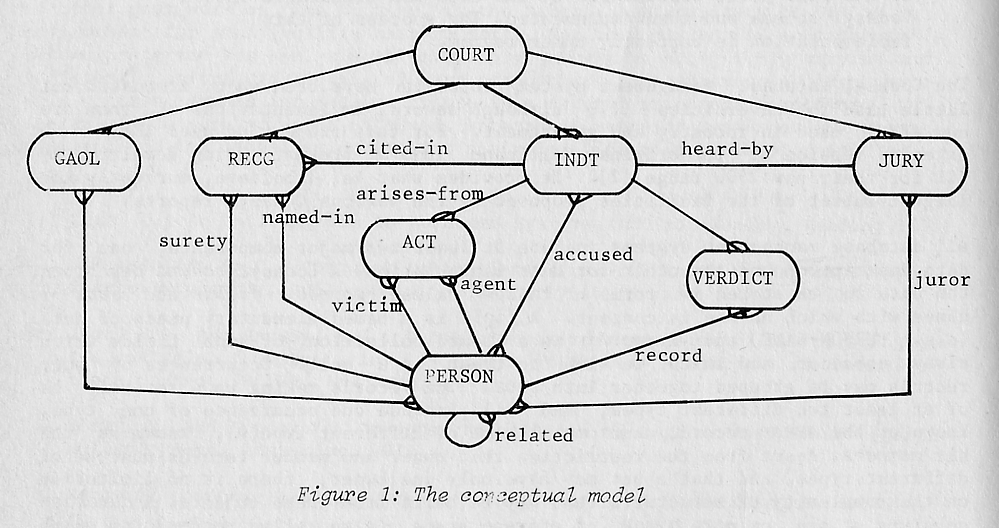

By the end of the 1970s, methods variously called ‘data modelling’, ‘conceptual analysis’, ‘database design’ vel sim. had become common practice.

- remember: a centralised mainframe world dominated by IBM

- spread of office automation and consequent data integration

- ANSI/SPARC three-level model

An inherently reductive process

How applicable are such methods to the complexity of humanities data sources?

The 1980s were a period of technological enthusiasm

- Digital methods and digital resources, despite their perceived strangeness, were increasingly evident in the Humanities

- There was some public funding of infrastructural activities, both at national and European levels: in the UK, for example, the Computers in Teaching Initiative and the Arts and Humanities Data Service

- Something radically new, or just an update?

- Humanities Computing (aka Digital Humanities) gets a foothold, by establishing courses

- Thaller (in 1989) challenges advocates of ‘Humanities Computing’ to define its underlying theory

- Unsworth and others (by 2002) start using the phrase ‘scholarly primitives’ to characterise a core set of procedures sustaining something called ‘Digital Humanities’

Where did these digital methods originally thrive?

- corpus linguistics

- authorship and stylometry

- historical data

Corpus Linguistics: searching for meaning

How do we identify the components of a discourse which give it meaning?

- meaning is usage: ‘Die Bedeutung eines Wortes ist sein Gebrauch in der Sprache’ (‘the meaning of a word is its use in the language’, Wittgenstein, 1953)

- meaning is collocation: ‘You shall know a word by the company it keeps’ (Firth, 1957)

the text is the data

Stylometrickery

- text as a bag of words

- statistical analysis of word frequencies to determine authorship and quantify style

- from T C Mendenhall (1887) to J F Burrows (1987)

- ... now reborn as distant reading, culturomics

the text is the data
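To make the ‘bag of words’ idea concrete, here is a minimal Python sketch (an illustration only, not Mendenhall's or Burrows's actual procedure): the text is reduced to token counts, from which a word-length profile and the relative frequencies of chosen words can be computed. The function names and the sample sentence are our own.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lower-case and keep runs of word characters; \w is Unicode-aware
    # in Python 3, so a form like "Hwæt" stays a single token.
    return re.findall(r"\w+", text.lower())


def bag_of_words(text: str) -> Counter:
    # The "bag": token counts, with order, layout and structure discarded.
    return Counter(tokenize(text))


def word_length_distribution(text: str) -> dict[int, float]:
    # Proportion of tokens of each length, loosely in the spirit of
    # Mendenhall's word-length curves (a simplification).
    lengths = Counter(len(t) for t in tokenize(text))
    total = sum(lengths.values())
    return {n: c / total for n, c in sorted(lengths.items())}


def relative_frequencies(text: str, vocabulary: list[str]) -> dict[str, float]:
    # Relative frequency per token of selected (e.g. function) words.
    bag = bag_of_words(text)
    total = sum(bag.values())
    return {w: (bag[w] / total if total else 0.0) for w in vocabulary}


sample = "It is a truth universally acknowledged, that a single man ..."
print(relative_frequencies(sample, ["a", "that", "the"]))  # {'a': 0.2, 'that': 0.1, 'the': 0.0}
```

Everything a reader might call ‘style’ has to survive this reduction to counts before it can be measured.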

The re-invention of Quellenkritik (source criticism)

‘History that is not quantifiable cannot claim to be scientific’ (Le Roy Ladurie 1972)

- In the UK, a series of History and Computing (1986-1990) conferences showed historians already using commercial DBMS, data analysis tools developed for survey analysis, "personal database systems" ...

- In France, J-P Genet and others influenced by the Annales school proposed a programme for digitizing historical source records

- Further pursued by Manfred Thaller with the program kleio (1982) -- a tool for transcribing and analysing (extracts from) historical sources, which included annotation of their content/significance

the data is extracted from the text

How should we model textual data?

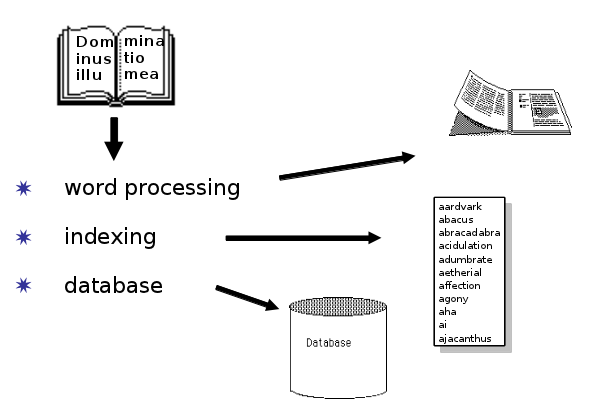

In interpreting text, the trained human brain operates quite successfully on three distinct levels; not surprisingly, three distinct types of computer software have evolved to mimic these capabilities.

Text is little boxes

- TeX was developed by Donald Knuth, a Stanford mathematician, to produce high quality typeset output from annotated text

- Knuth also developed the associated idea of literate programming: that software and its documentation should be written and maintained as an integrated whole

- TeX is still widely used, particularly in the academic community: it is open source and there are several implementations

Database orthodoxy

- identify important entities which exist in the real world and the relationships amongst them

- formally define a conceptual model of that universe of discourse

- map the conceptual model to a storage model (network, relational, whatever...)
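The three steps of database orthodoxy can be sketched in Python with the standard-library sqlite3 module. The entities (a hypothetical `Person` and `Indictment`, loosely suggested by court records) and the example values are invented for illustration; they are not a model of any real archive.

```python
import sqlite3
from dataclasses import dataclass


# Steps 1-2: entities and relationships of a (hypothetical) universe of discourse
@dataclass
class Person:
    name: str
    parish: str


@dataclass
class Indictment:
    defendant: Person  # relationship: each indictment names one defendant
    offence: str
    verdict: str


# Step 3: map the conceptual model onto a relational storage model
SCHEMA = """
CREATE TABLE person (
    id     INTEGER PRIMARY KEY,
    name   TEXT NOT NULL,
    parish TEXT
);
CREATE TABLE indictment (
    id           INTEGER PRIMARY KEY,
    defendant_id INTEGER NOT NULL REFERENCES person(id),
    offence      TEXT NOT NULL,
    verdict      TEXT
);
"""


def store(conn: sqlite3.Connection, ind: Indictment) -> int:
    """Insert one indictment and its defendant; return the new person id."""
    cur = conn.execute(
        "INSERT INTO person (name, parish) VALUES (?, ?)",
        (ind.defendant.name, ind.defendant.parish),
    )
    person_id = cur.lastrowid
    conn.execute(
        "INSERT INTO indictment (defendant_id, offence, verdict) VALUES (?, ?, ?)",
        (person_id, ind.offence, ind.verdict),
    )
    return person_id


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
# Invented example values, not taken from any real record
store(conn, Indictment(Person("Jane Doe", "St Mary"), "theft", "not guilty"))
rows = conn.execute(
    "SELECT p.name, i.offence, i.verdict "
    "FROM indictment i JOIN person p ON p.id = i.defendant_id"
).fetchall()
print(rows)  # [('Jane Doe', 'theft', 'not guilty')]
```

Note what the schema forces: anything the source records that has no column (a marginal note, an uncertain reading, a name spelled two ways) simply has nowhere to go.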

But what are the "important entities" we might wish to identify in a textual resource?

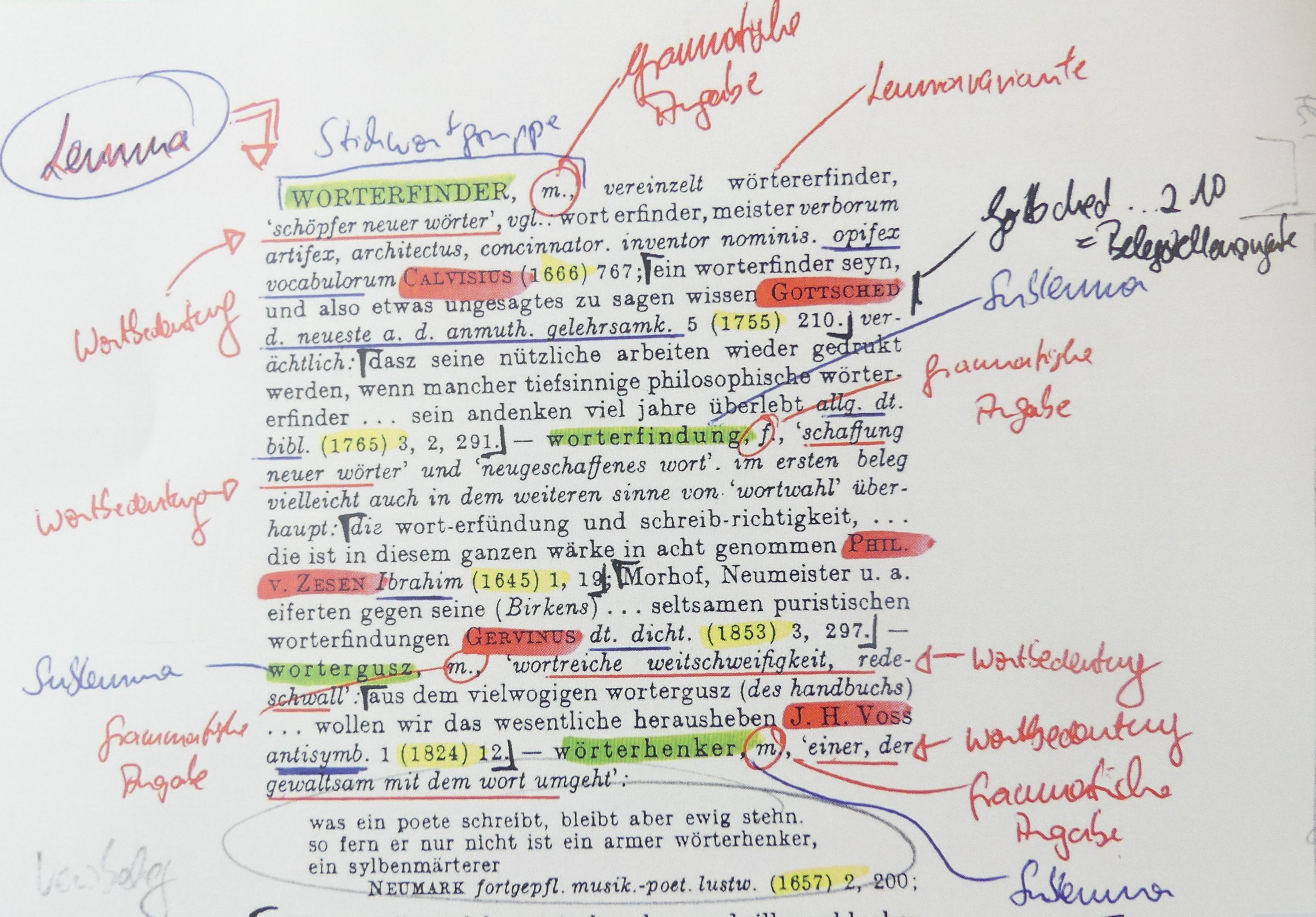

Assize court records, for example

What is a text (really)?

- content: the components (words, images, etc.) which make up a document

- structure: the organization and inter-relationship of those components

- presentation: how a document looks and what processes are applied to it

- context: how the document was produced, circulated, processed, and understood

- ... and possibly many other readings

Separating content, structure, presentation, and context means:

- the content can be re-used

- the structure can be formally validated

- the presentation can be customized for different media and for different audiences

- the context can be analysed

- in short, the information can be uncoupled from its processing

This is not a new idea! But is it a good one?

Some ambitious claims ensued

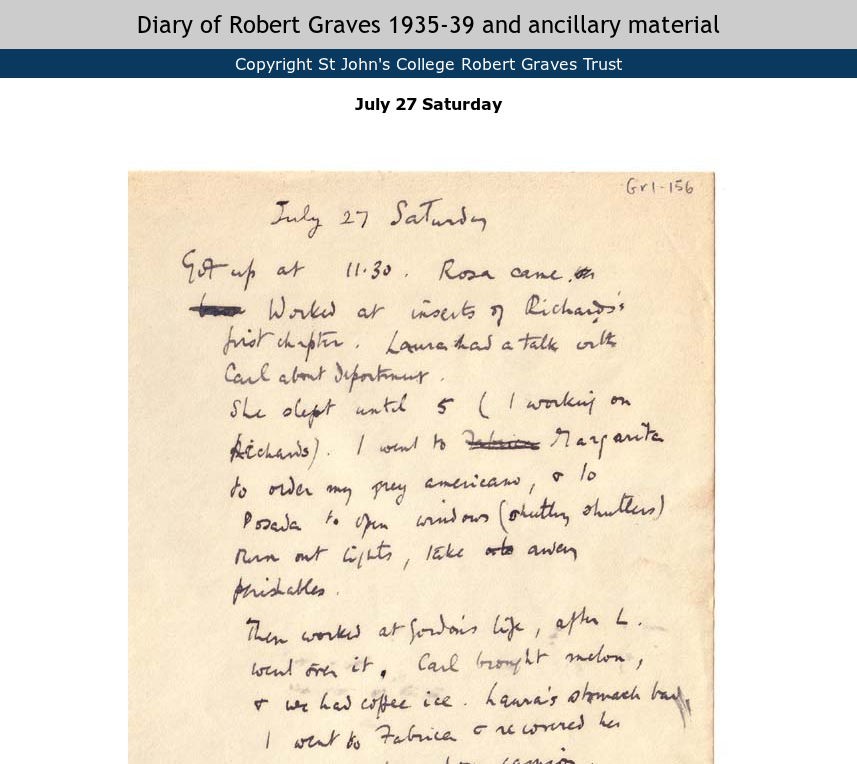

A digital text may be ...

a ‘substitute’ (surrogate) simply representing the appearance of an existing document

... or it may be

a representation of its linguistic content and structure, with additional annotations about its meaning and context.

Functions of encoding

- It makes explicit to a processor how something should be processed.

- In the past, ‘markup’ was what told a typesetter how to deal with a manuscript

- Nowadays, it is what tells a computer program how to deal with a stream of textual data.

... and thus expresses the encoder's view of what matters in this document, determining how it can subsequently be analysed.

Which textual data matters?

- the shape of the letters and their layout?

- the presumed creator of the writing?

- the (presumed) intentions of the creator?

- the stories we read into the writing?

A ‘document’ is something that exists in the world, which we can digitize.

A ‘text’ is an abstraction, created by or for a community of readers, which we can encode.

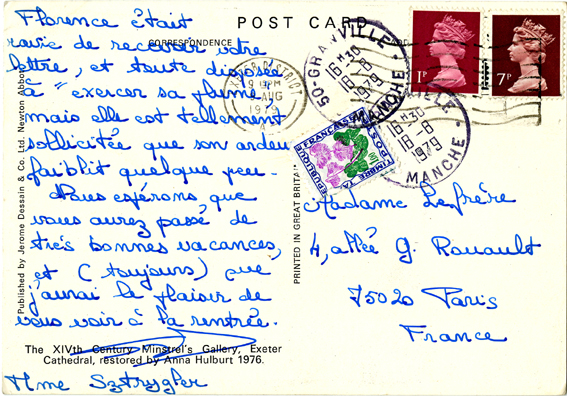

The document as ‘Text-Bearing Object’ (TBO)

Materia appetit formam ut virum foemina (‘matter desires form as woman desires man’)

- Traditionally, we distinguish form and content

- In the same way, we might think of an inscription or a manuscript or even a transcribed recording as the bearer or container or form instantiating an abstract notion -- a text

- but quite a lot of the text is actually all in our head

And don't forget ... digital texts are also TBOs!

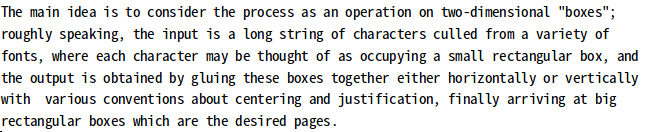

Markup is a scholarly activity

- The application of markup to a document is an intellectual activity

- Deciding exactly what markup to apply and why is much the same as editing a text

- Markup is rarely neutral, objective, or deterministic: interpretation is needed

- Because it obliges us to confront difficult ontological questions, markup can be considered a research activity in itself

- Good textual encoding is never as easy or quick as people would believe -- do things better, not necessarily quicker

- The markup scheme used for a project should result from a detailed analysis of the properties of the objects the project aims to use or create and of their historical/social context

... though considerations of scale may have an effect ...

Because ...

Good markup (like good scholarship) is expensive

Choices (1)

Consider this kind of object:

Some typical varieties of curated markup

<hi rend="dropcap">H</hi><g ref="#wynn">W</g>ÆT WE GARDE

<lb/>na in gear-dagum þeod-cyninga

<lb/>þrym gefrunon, hu ða æþelingas

<lb/>ellen fremedon. oft scyld scefing sceaþe<add>na</add>

<lb/>þreatum, moneg<expan>um</expan> mægþum meodo-setl<add>a</add>

<lb/>of<damage><desc>blot</desc></damage>teah ...

<lg>

<l>Hwæt! we Gar-dena in gear-dagum</l>

<l>þeod-cyninga þrym gefrunon,</l>

<l>hu ða æþelingas ellen fremedon,</l>

</lg>

<lg>

<l>Oft <persName>Scyld Scefing</persName> sceaþena þreatum,</l>

<l>monegum mægþum meodo-setla ofteah;</l>

<l>egsode <orgName>Eorle</orgName>, syððan ærest wearþ</l>

<l>feasceaft funden...</l>

</lg>

... and

<s>

<w pos="interj" lemma="hwaet">Hwæt</w>

<w pos="pron" lemma="we">we</w>

<w pos="npl" lemma="gar-denum">Gar-dena</w>

<w pos="prep" lemma="in">in</w>

<w pos="npl" lemma="gear-dagum">gear-dagum</w> ...

</s>
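Once words carry `pos` and `lemma` attributes, a program can query the annotator's categories rather than the raw string. A minimal sketch using Python's standard xml.etree.ElementTree, assuming the `<s>` fragment above is well-formed on its own (its trailing "..." is dropped) and carries no TEI namespace declaration:

```python
import xml.etree.ElementTree as ET
from collections import Counter

# The <s> fragment from the slide, without its trailing "..."
SENTENCE = """<s>
  <w pos="interj" lemma="hwaet">Hwæt</w>
  <w pos="pron" lemma="we">we</w>
  <w pos="npl" lemma="gar-denum">Gar-dena</w>
  <w pos="prep" lemma="in">in</w>
  <w pos="npl" lemma="gear-dagum">gear-dagum</w>
</s>"""


def annotated_tokens(xml_text: str) -> list[tuple[str, str, str]]:
    """Return (form, lemma, pos) for every <w> element, in document order."""
    root = ET.fromstring(xml_text)
    return [(w.text, w.get("lemma"), w.get("pos")) for w in root.iter("w")]


tokens = annotated_tokens(SENTENCE)
pos_counts = Counter(pos for _form, _lemma, pos in tokens)
print(pos_counts["npl"])  # 2
print([lemma for _form, lemma, pos in tokens if pos == "npl"])  # ['gar-denum', 'gear-dagum']
```

If the fragment were in the TEI namespace, element names would need to be qualified, e.g. `root.iter("{http://www.tei-c.org/ns/1.0}w")`. Either way, the counts can only reflect the categories the annotator chose.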

or even

<w pos="npl" corresp="#w2">Gar-dena</w>

<w pos="prep" corresp="#w3">in</w>

<w pos="npl" corresp="#w4">gear-dagum</w>

<w xml:id="w2">armed danes</w>

<w xml:id="w3">in</w>

<w xml:id="w4">days of yore</w>
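The `corresp` pointers can be followed mechanically to pair each Old English form with its translation. A minimal Python sketch, assuming both sets of `<w>` elements sit under a hypothetical wrapper element `<root>` and that each `corresp` holds a single `#id` pointer (TEI also allows several, space-separated, which this sketch does not handle):

```python
import xml.etree.ElementTree as ET
from typing import Optional

# ElementTree exposes xml:id under the predeclared XML namespace
XML_ID = "{http://www.w3.org/XML/1998/namespace}id"

# Hypothetical <root> wrapper holding both sets of <w> elements from the slide
FRAGMENT = """<root>
  <w pos="npl" corresp="#w2">Gar-dena</w>
  <w pos="prep" corresp="#w3">in</w>
  <w pos="npl" corresp="#w4">gear-dagum</w>
  <w xml:id="w2">armed danes</w>
  <w xml:id="w3">in</w>
  <w xml:id="w4">days of yore</w>
</root>"""


def align(xml_text: str) -> list[tuple[str, Optional[str]]]:
    """Pair each <w> bearing corresp="#id" with the text of the element it points to."""
    root = ET.fromstring(xml_text)
    # Index every <w> that has an xml:id
    by_id = {w.get(XML_ID): w.text for w in root.iter("w") if w.get(XML_ID)}
    pairs = []
    for w in root.iter("w"):
        pointer = w.get("corresp")
        if pointer and pointer.startswith("#"):
            # None if the pointer's target is missing
            pairs.append((w.text, by_id.get(pointer[1:])))
    return pairs


for source, gloss in align(FRAGMENT):
    print(f"{source} -> {gloss}")
```

The alignment is only as good as the encoder's decisions about which units correspond: here ‘Gar-dena’ maps to a two-word gloss, and nothing in the markup records why.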

... not to mention ...

<l>Oft <persName ref="https://en.wikipedia.org/wiki/Skj%C3%B6ldr">Scyld Scefing</persName> sceaþena þreatum,</l>

or even

<l>Oft <persName ref="#skioldus">Scyld Scefing</persName> sceaþena þreatum,</l>

<person xml:id="skioldus">

<persName source="#beowulf">Scyld Scefing</persName>

<persName xml:lang="la">Skioldus</persName>

<persName xml:lang="non">Skjöld</persName>

<occupation>Legendary Norse King</occupation>

<ref target="https://en.wikipedia.org/wiki/Skj%C3%B6ldr">Wikipedia entry</ref>

</person>

Choices (2)

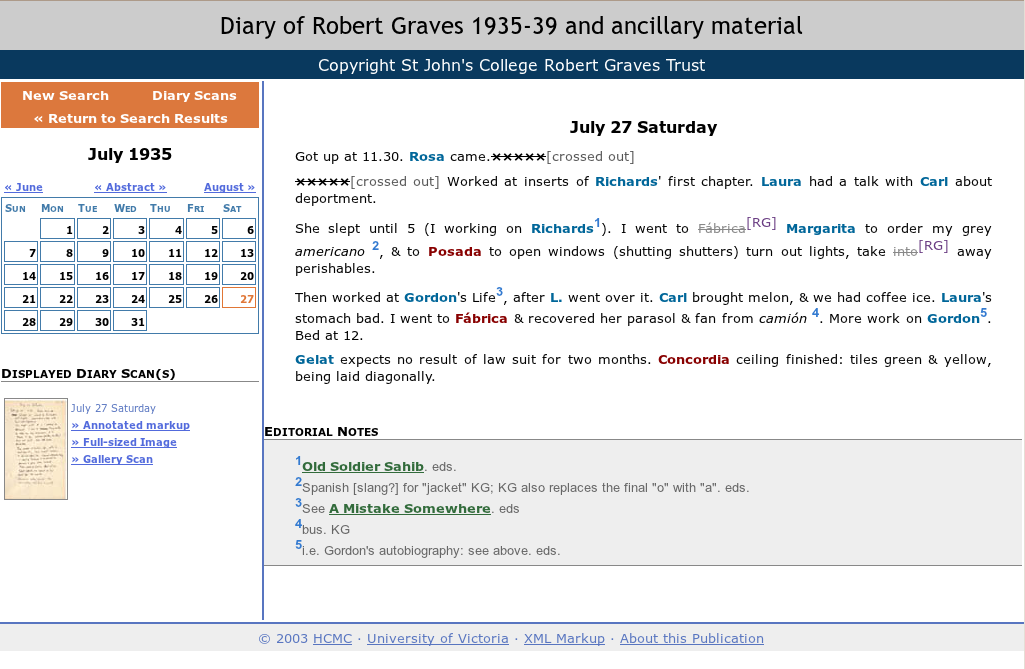

How about this kind of object ...

The digital library model

What can you do with a million books?

- Text is a bunch of page images backed up with OCR-generated transcription

- analysable only as a bag of words

- A mass of bibliographical data

- begging the representativeness question

Distant Reading

"Designing a text-analysis program is necessarily an interpretative act, not a mechanical one, even if running the program becomes mechanistic." (Johanna Drucker, ‘Why Distant Reading Isn’t’, 2017)

Choices (3)

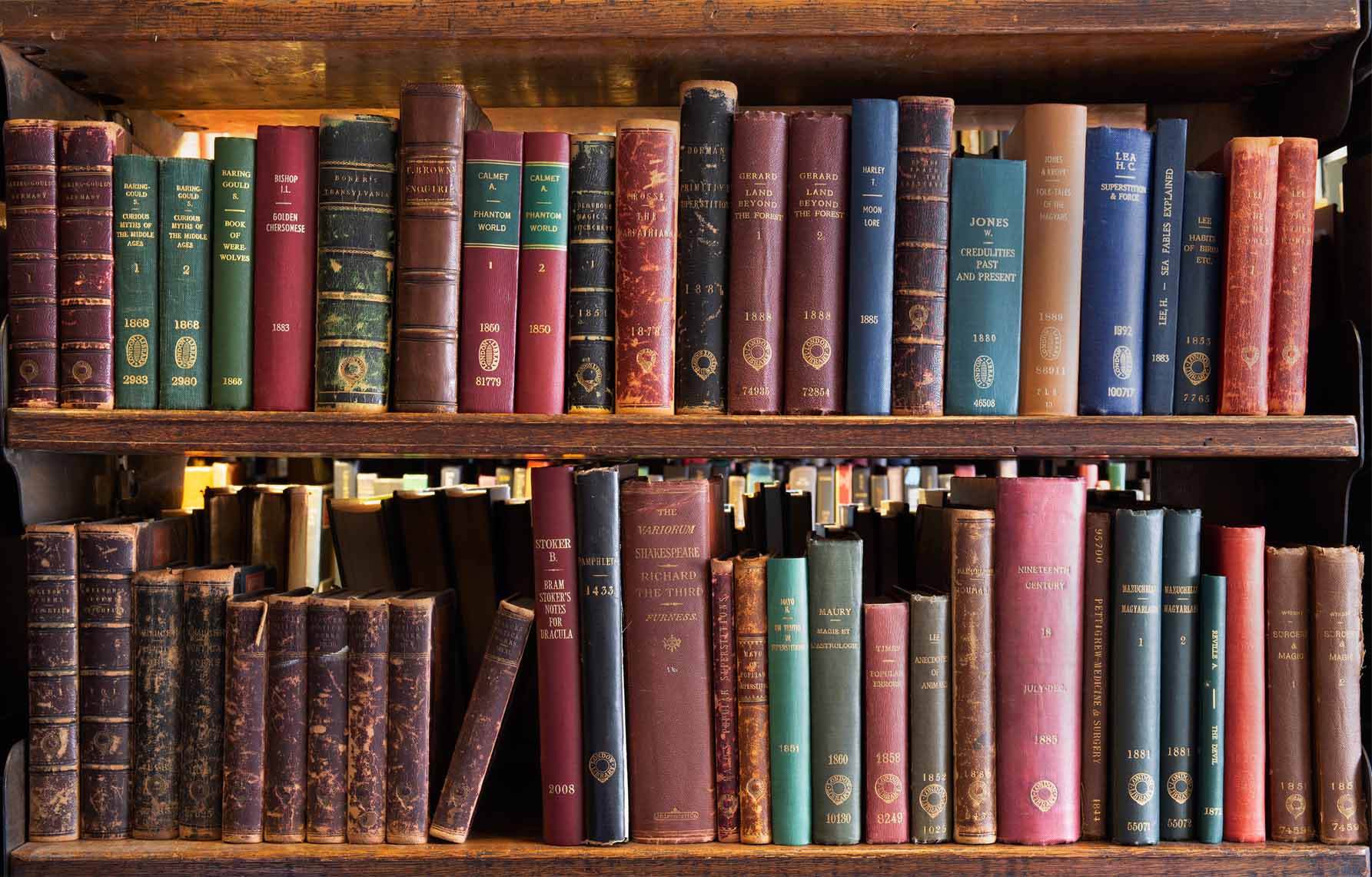

... or this kind of object

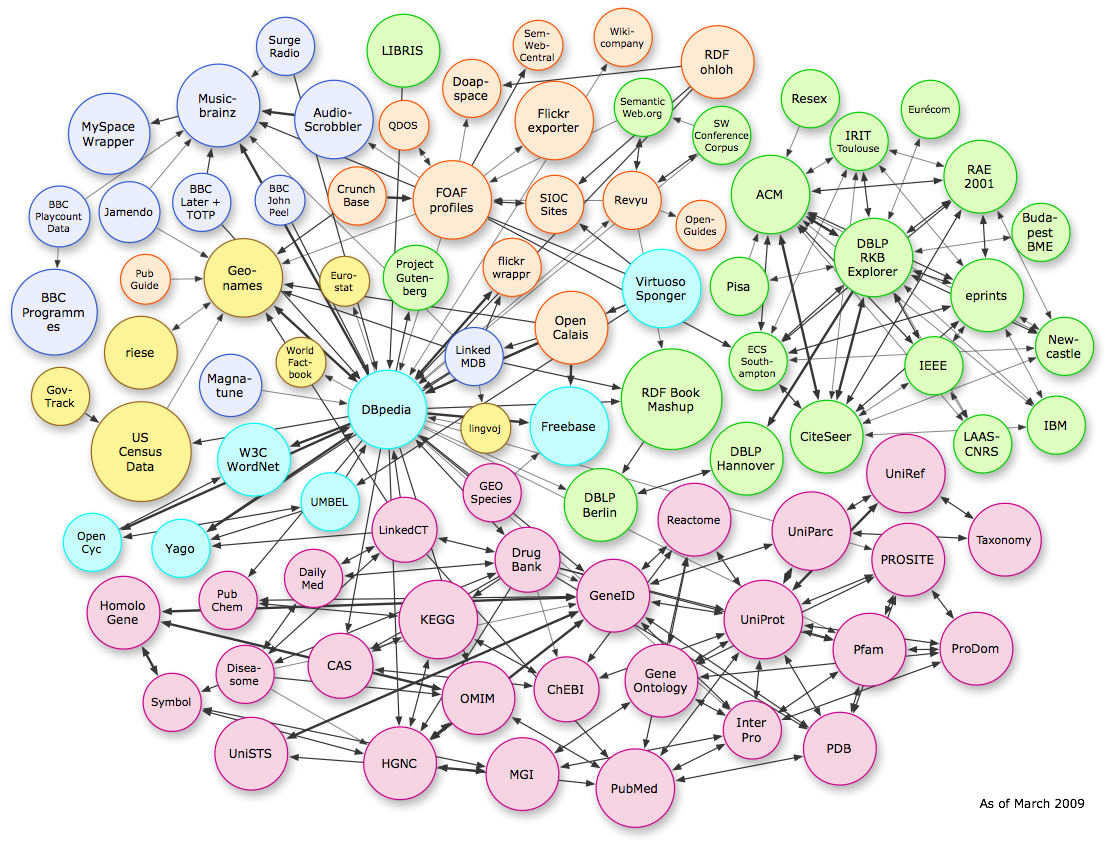

The linked data model

‘LOD creates a store of machine-actionable data on which improved services can be built... facilitate the breakdown of the tyranny of domain silos ... provide direct access to data in ways that are not currently possible ... provide unanticipated benefits that will emerge later’ (Anon, passim)

LOD is about linking web pages together...

- The "meaning" of a set of TEI documents may be inherently complex, nuanced, internally contradictory, imprecise.

- The "meaning" of a web page supporting a bit of e-commerce is exhausted by its RDF description

Wait ...

- Just how many markup systems/models does the world need?

- One size fits all?

- Let a thousand flowers bloom?

- Roll your own!

- We've been here before...

- one construct and many views

- modularity and extensibility

... did someone mention the TEI?

Impact and effects of data-driven research

- The trend towards open data, motivated (partly) by a scientistic concern for replicability

- The digital demotic: opening up of interdisciplinary possibilities -- and the cult of the amateur

- Some specific methodological considerations:

- what are the underlying populations being sampled?

- what kind/s of standardisation work best?

- how reliably can disparate sources be analysed together?

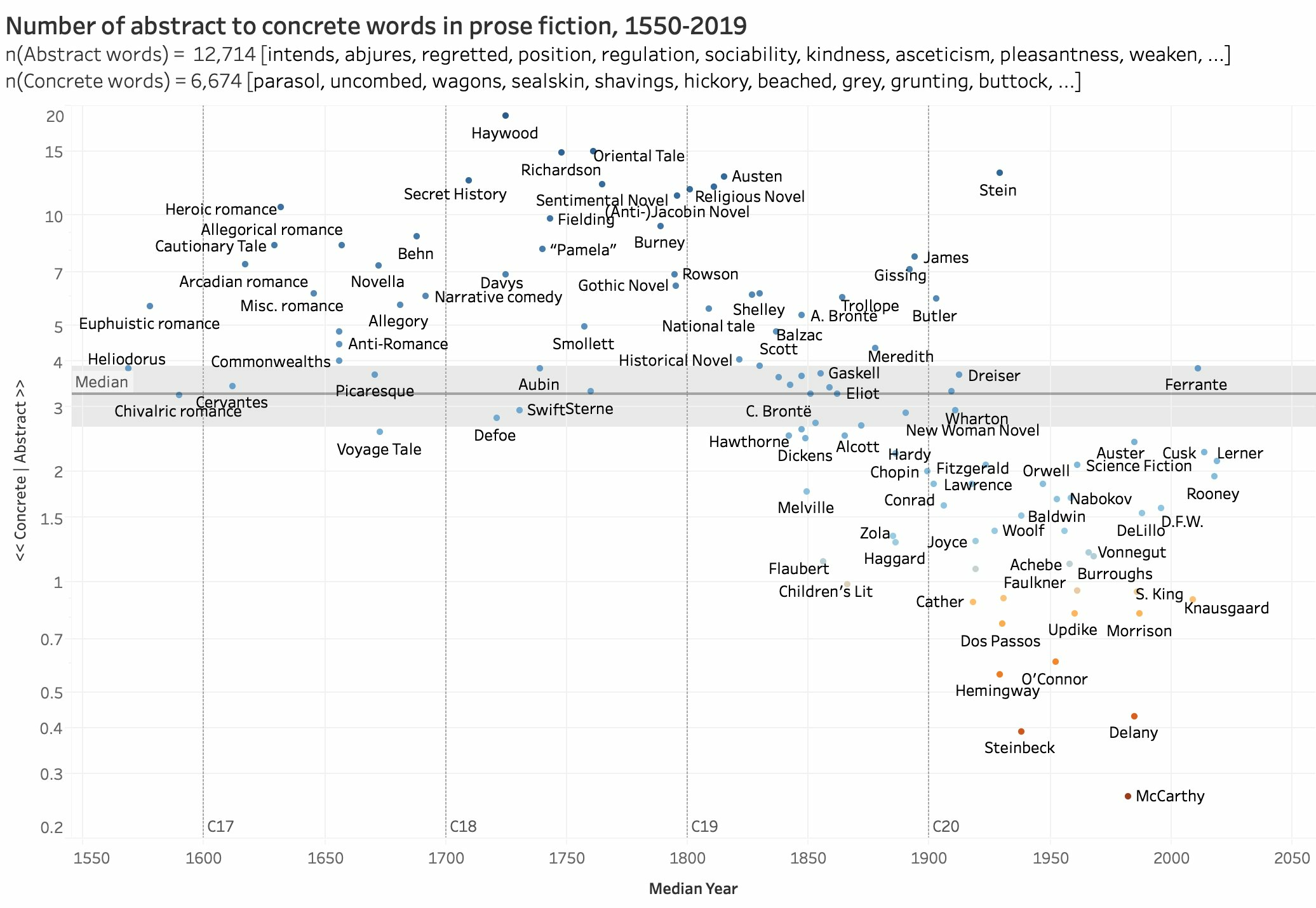

Representativeness ... of what?

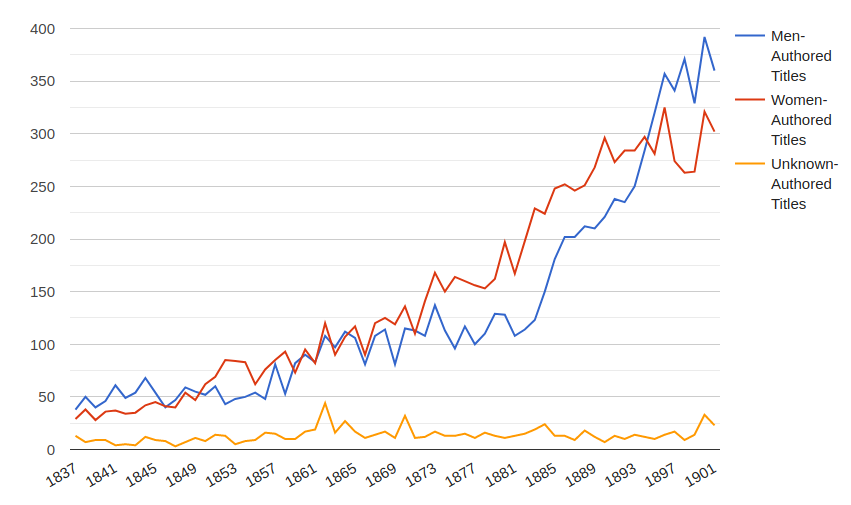

Are there more novels published by men than by women in the 19th century?

How should we create a representative sample of this population?

- by number (1 each from the first decade, 8 each from the last)?

- by variability (1 each from each decade)
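The two sampling strategies correspond to proportional and equal stratified allocation. A minimal Python sketch, with invented decade labels and population counts chosen only so that the last decade is eight times the size of the first; the placeholder titles are likewise hypothetical:

```python
import random

# Hypothetical population: number of novels per decade (invented figures)
POPULATION = {"1800s": 50, "1840s": 200, "1890s": 400}


def allocate_by_number(counts: dict[str, int], n: int) -> dict[str, int]:
    """Proportional allocation: each decade's share of the sample matches its
    share of the population. Rounding may make the total differ slightly from n."""
    total = sum(counts.values())
    return {decade: round(n * c / total) for decade, c in counts.items()}


def allocate_by_variability(counts: dict[str, int], k: int) -> dict[str, int]:
    """Equal allocation: the same number of texts from every decade,
    capped at the number available."""
    return {decade: min(k, c) for decade, c in counts.items()}


def draw(counts: dict[str, int], allocation: dict[str, int], seed: int = 0) -> dict[str, list[str]]:
    """Simple random sample without replacement within each decade."""
    rng = random.Random(seed)
    return {
        decade: rng.sample([f"{decade} novel {i}" for i in range(c)], allocation[decade])
        for decade, c in counts.items()
    }


print(allocate_by_number(POPULATION, 26))      # {'1800s': 2, '1840s': 8, '1890s': 16}
print(allocate_by_variability(POPULATION, 2))  # {'1800s': 2, '1840s': 2, '1890s': 2}
```

With proportional allocation the final decade dominates any aggregate figure; with equal allocation each decade carries the same weight, whatever its share of the population. Neither choice is neutral, and adding a second category (such as author gender) multiplies the strata to be filled.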

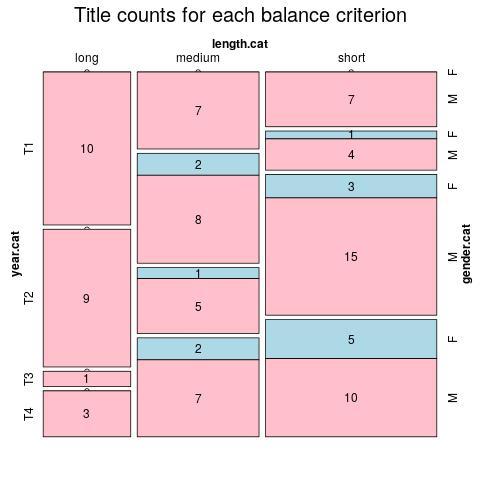

But what if we want to consider multiple categories?

Aiming for a balanced corpus

cultural difference or sampling error?

Is the TEI really an ontology?

- The TEI was originally conceived as a way of modelling the concepts researchers shared about the nature of the texts they wished to process

- Modelling the semantics of that set of concepts formally remains a research project

- Crosswalks or mappings to other "real" ontologies, such as those expressed in OWL, are possible: one has been implemented for CIDOC-CRM

- (the TEI provides a hook in the shape of the <equiv> element)

But before we can extract or model their content, documents must be interpreted...

Data science and textual data

... and (maybe) the same applies to data

"All data is historical data: the product of a time, place, political, economic, technical, & social climate. If you are not considering why your data exists, and other data sets don’t, you are doing data science wrong"

[Melissa Terras, Opportunities, barriers, and rewards in digitally-led analysis of history, culture and society. Turing Lecture 2019-03-03, https://youtu.be/4yYytLUViI4]

A recent example: global reach versus situated context

- A nice simple, well-understood domain: digital collections of 19th century newspapers

- all professionally catalogued with extensive metadata

- all (mostly) accessible via standard interfaces

"attempts to create a single map of all possible elements and attributes, and to provide provenance of internal structures while grouping object by type and subtype, raised significant ontological issues" (Beals, M. H. et al., The Atlas of Digitised Newspapers and Metadata, 2020)

Different institutional catalogues

- describe the same items with differing degrees of completeness

- use similar but not identical terminology

All institutional collections

- reflect historically situated selection principles

- in particular, selection for digitization is motivated largely by economic considerations

Conclusion

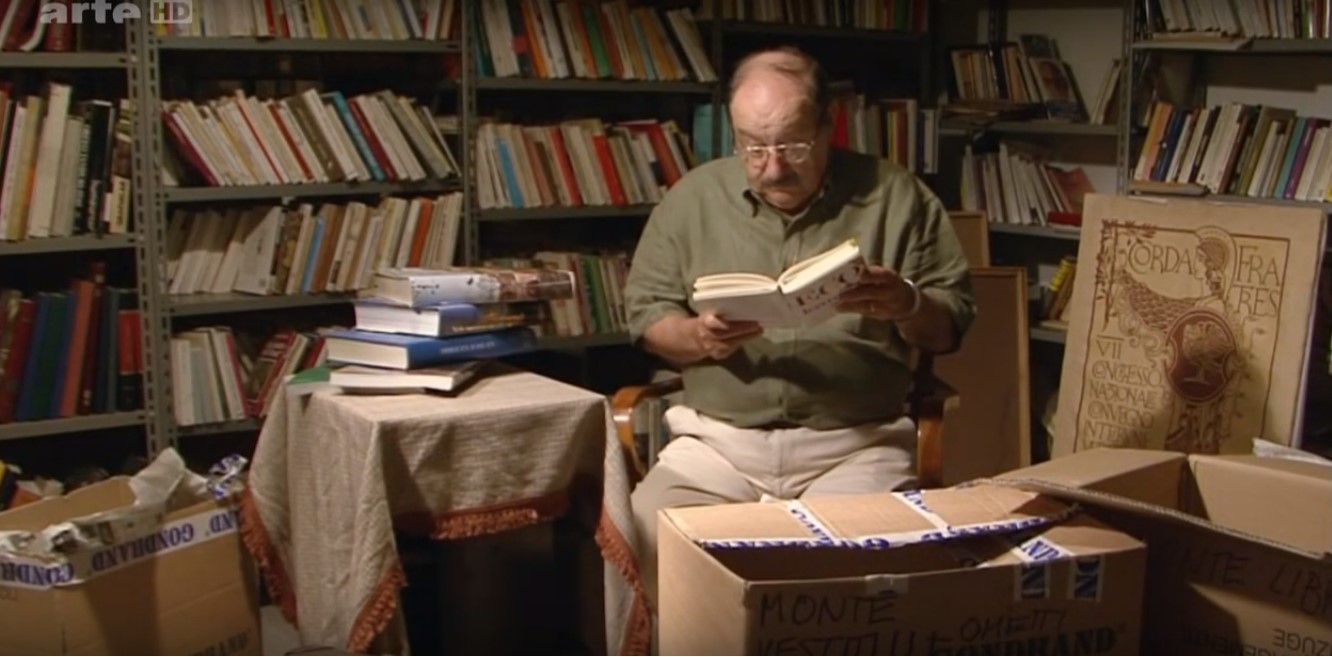

Umberto says:

- In spite of the obvious differences in degrees of certainty and uncertainty, every picture of the world (be it a scientific law or a novel) is a book in its own right, open to further interpretation.

- But certain interpretations can be recognised as unsuccessful because they are like a mule, that is, they are unable to produce new interpretations or cannot be confronted with the traditions of the previous interpretation.

- The force of the Copernican revolution is not only due to the fact that it explains some astronomical phenomena better than the Ptolemaic tradition, but also to the fact that – instead of representing Ptolemy as a crazy liar – it explains why and on which grounds he was justified in outlining his own interpretation

We conclude...

‘Text is not a special kind of data: data is a special kind of text’
